Government to Take Closer Look at Data Centers and AI Power Consumption

Randy Sukow

Artificial intelligence (AI) is rapidly weaving itself into modern life. You might have noticed faster automation, more frequent use of artificial voices on the telephone and greater use of generative AI to create images, videos, text and computer code. As much as you might have noticed these things, chances are you were unaware of many other AI activities.

Another way to measure how much AI is changing everything is to analyze how much electricity it takes to run those AI functions. That is one of the main purposes of a Call for Comments notice the Commerce Department’s National Telecommunications and Information Administration (NTIA) recently issued in coordination with the Department of Energy. The agencies want to examine the boom in data centers supporting AI.

Data centers tend to be large, rather nondescript buildings, usually in out-of-the-way places, that house computer servers storing and distributing large amounts of data for the Internet.

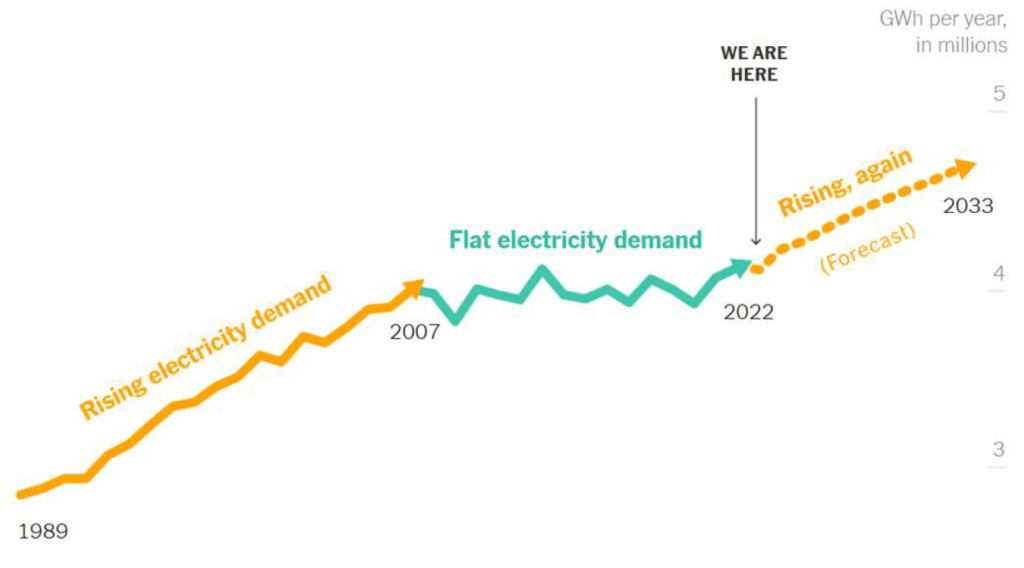

“Powering and cooling data centers is energy-intensive: data centers physically located in the United States consumed more than 4 percent of the country’s total electricity in 2022, with projections suggesting the share may increase up to 9 percent by 2030,” according to the notice. AI is driving much of that consumption.

Looking at it from a global perspective, a report from the International Energy Agency (IEA), an analysis organization with 31 member countries, says, “When comparing the average electricity demand of a typical Google search [0.3 watt-hours (Wh) of electricity] to OpenAI’s ChatGPT [2.9 Wh per request], and considering 9 billion searches daily, this would require almost 10 [terawatt-hours (TWh)] of additional electricity in a year.”
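The arithmetic behind the IEA comparison quoted above can be checked in a few lines. The sketch below uses only the three figures from the quote; the 365-day year and the function name `annual_twh` are assumptions made for illustration, not part of the IEA report. It computes both the additional electricity (ChatGPT demand minus the Google search it would replace) and the total if every search cost 2.9 Wh.

```python
# Figures quoted from the IEA report in the article.
GOOGLE_SEARCH_WH = 0.3      # watt-hours per typical Google search
CHATGPT_REQUEST_WH = 2.9    # watt-hours per ChatGPT request
SEARCHES_PER_DAY = 9e9      # searches per day

# Assumptions for this sketch (not stated in the source).
DAYS_PER_YEAR = 365
WH_PER_TWH = 1e12           # 1 terawatt-hour = 10^12 watt-hours


def annual_twh(wh_per_query, queries_per_day=SEARCHES_PER_DAY):
    """Convert a per-query energy cost into terawatt-hours per year."""
    return wh_per_query * queries_per_day * DAYS_PER_YEAR / WH_PER_TWH


# Extra energy if every search cost 2.9 Wh instead of 0.3 Wh.
additional_twh = annual_twh(CHATGPT_REQUEST_WH - GOOGLE_SEARCH_WH)

# Total energy if every search cost 2.9 Wh.
total_twh = annual_twh(CHATGPT_REQUEST_WH)

print(f"Additional: {additional_twh:.1f} TWh per year")
print(f"Total:      {total_twh:.1f} TWh per year")
```

Under these assumptions the additional demand works out to roughly 8.5 TWh per year and the total to roughly 9.5 TWh per year, both in the range the IEA describes as “almost 10.”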

IEA estimates that worldwide consumption from data centers in 2022 came to 460 TWh and could more than double to 1,000 TWh by 2026. “This demand is roughly equivalent to the electricity consumption of Japan,” it said.

IEA said that it also forecasts AI-related consumption by monitoring AI server sales. NVIDIA, which dominates the global AI server market, “shipped 100,000 units that consume an average of 7.3 TWh of electricity annually. By 2026, the AI industry is expected to have grown exponentially to consume at least 10 times its demand in 2023,” IEA said.
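As a rough sanity check on the server figures quoted above, the fleet total can be divided back into a per-unit number. This is only a sketch: it assumes the 7.3 TWh is spread evenly across all 100,000 units and that each unit runs continuously for a 365-day year, neither of which the article states. The function names are hypothetical.

```python
# Figures quoted from the IEA report in the article.
UNITS_SHIPPED = 100_000
FLEET_TWH_PER_YEAR = 7.3

# Assumption for this sketch: continuous operation, 365-day year.
HOURS_PER_YEAR = 365 * 24   # 8,760 hours
MWH_PER_TWH = 1e6           # 1 terawatt-hour = 10^6 megawatt-hours
KWH_PER_MWH = 1e3           # 1 megawatt-hour = 10^3 kilowatt-hours


def per_unit_mwh_per_year(fleet_twh, units):
    """Annual energy per unit in megawatt-hours, assuming an even split."""
    return fleet_twh * MWH_PER_TWH / units


def average_kw(mwh_per_year):
    """Average continuous power draw in kilowatts for a given annual energy."""
    return mwh_per_year * KWH_PER_MWH / HOURS_PER_YEAR


unit_mwh = per_unit_mwh_per_year(FLEET_TWH_PER_YEAR, UNITS_SHIPPED)
unit_kw = average_kw(unit_mwh)

print(f"Per unit: {unit_mwh:.0f} MWh per year")
print(f"Average draw: {unit_kw:.1f} kW per unit")
```

Under those assumptions, each unit accounts for about 73 MWh a year, or an average draw of a little over 8 kW.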

NVIDIA recently announced that it has begun testing its Grace Hopper Superchip, a processor for its servers aimed at improving affordability and operating efficiency. Among its features, the company claims, is “a 4x reduction in energy consumption and a 7x performance improvement compared with CPU-based systems.”

On Feb. 29, Tesla’s Elon Musk appeared at the 2024 Bosch Connected World Conference in Berlin, Germany, to discuss the challenges to building autonomous vehicles that employ AI for navigation. “A year ago, the shortage was chips; neural net chips,” he said. “Then, it was very easy to predict that the next shortage will be voltage step-down transformers…and the next shortage will be electricity. I think next year you will see that they just can’t find enough electricity to run all the chips.”

Comments in the NTIA/DOE proceeding are due Nov. 4. Along with the electricity question, commenters could raise the issue of whether rural sites will be feasible for new data centers. Most data centers in the United States currently are in California, Texas and Virginia, near high-tech business centers. But as AI demand grows, as fiber networks supporting data center connections expand, and as data center owners look for large tracts of land for new facilities, alternative locations might make sense for future planning.

“Data center operators have recognized that it’s easier to move gigabits of data than electrons and have entered locations where land is available and additional power is more attainable,” said Jeff Johnston, CoBank’s lead digital infrastructure economist, in a May press release. “Over time, we expect new data centers will move outside of secondary markets and deeper into rural America.”

“In crowded cities, securing the massive plots required for today’s sprawling data center campuses can be incredibly challenging and expensive,” said consultant Robert Harrington of Rural Growth Strategies in a LinkedIn article. “Rural areas, in contrast, have ample open space to accommodate these sizable facilities, often at a fraction of the cost.”

Harrington also said there could be climate advantages to northern rural areas. “Locating these facilities in cooler, less humid regions can dramatically reduce the need for power-hungry air conditioning, resulting in significant energy savings,” he said.

Should small towns actively attract data centers to their communities? Will technology developers be able to create energy efficiencies to keep pace with AI demand? It appears that there will be increased focus on those and other questions in coming months.